Face recognition technology is becoming more and more mature, and commercial applications are becoming more widespread. In order to prevent malicious people from forging or stealing the facial features of others for identity authentication, the face recognition system needs to have a live detection function to determine whether the submitted facial features come from a living real individual.

Face recognition technology is becoming more and more mature, and commercial applications are becoming more widespread. However, the human face is easily copied by means of photos, videos, and even simulation molds. By preparing these “props” in advance, the malicious person tries to disguise in the identification process in order to pass verification and achieve the purpose of illegal intrusion. In order to prevent malicious people from forging or stealing the facial features of others for identity authentication, the face recognition system needs to have a live detection function to determine whether the submitted facial features come from a living real individual.

Basic principles of face live detection

The basic function of face access control is Face Verification, and live detection belongs to Face Anti-Spoofing. Face authentication and face anti-counterfeiting, both technologies have their own focuses.

Face verification: Face verification is an algorithm to determine whether two face pictures are the same person, that is, to obtain the similarity between two face features through face comparison, and then compare it with a preset threshold. If the similarity is greater than the threshold, then The same person, otherwise the opposite. This is a very popular research direction in recent years, and a large number of algorithm models and loss functions have also been generated.

Face anti-counterfeiting: When the user brushes his face, the algorithm should identify whether this face is a real human face, and the algorithm should reject photos, videos, and prosthetic masks.

1.Photo attack and live detection

Photos are the simplest form of attack. Using social media, such as WeChat friends or Weibo, you can easily get photos of related people. But the photo is static after all, and you ca n’t blink, open your mouth, or turn your head. Using this feature, the living body detection system can issue several movement instructions, and by judging the compliance of the detected person's movement, it can realize interactive living body detection.

In order to deal with motion detection, some attackers improved the photo camouflage, printed another person's photo at the actual size, hollowed out the eyes and mouth of the photo, and stuck it on the face to expose the eyes and mouth. Blink, open your mouth, turn your head, etc. according to the instructions of the living body detection system. However, the effect of this forgery is far from the actual movement of the real face, and it is easily recognized by the detection algorithm.

2.Video attack and live detection

Video attacks record another person's actions into a video and play it against the detection system. However, the screen of the player is imaged by the camera, and the face of the player is also significantly different from the real person. The most obvious is the presence of moiré, reflections, reflections, blurred image quality, and distortion. What's more, there is also a problem that the video being played and the motion instruction are out of sync.

3. Prosthetic attack and live detection

The prosthesis attack is to make a three-dimensional mask similar to a real person, which solves the planarity defect of photo and video playback. There are many types of prostheses. The most common ones are masks made of plastic or hard paper. The cost is low, but the similarity of the material is very low, which can be identified by ordinary texture features. More advanced are silicone, latex and 3D printed three-dimensional masks. The appearance of these masks is close to the skin, but the surface reflectance of the material and the real face are still different, so there are still differences in imaging.

Common methods for face access control live detection

The motion living body detection method has high security, but requires the user to cooperate with the specified action, so the actual user experience is poor. In order to achieve the effect of non-sensing traffic, human body access control rarely uses motion detection in response to instructions, and usually performs live discrimination based on the difference between images and optical effects.

1.Ordinary camera live detection

Although there is no action response in accordance with the instructions, the real human face is not absolutely static, and there are always some micro-expressions, such as the rhythm of the eyelids and eyeballs, blinking, the expansion and contraction of lips and the surrounding cheeks. At the same time, the reflection characteristics of real human faces are different from those of attack media such as paper, screens, and three-dimensional masks, so the imaging is also different. With the detection based on features such as moiré, reflections, reflections, and textures, Yushi's detection system can easily deal with attacks on photos, videos, and prostheses.

Using a certain physical feature or a fusion of multiple physical features, we can train a neural network classifier through deep learning to distinguish between live and attack. The physical features in live detection are mainly divided into texture features, color features, frequency spectrum features, motion features, image quality features, and also include heartbeat features. There are many texture features, but the most mainstream are LBP, HOG, LPQ, etc.

In addition to RGB color features, academia has found that HSV or YCbCr has better performance in distinguishing living and non-living, and is widely used for different texture features.

The principle of spectral characteristics is that the living body and non-living body have different responses in certain frequency bands.

Motion feature extraction of target changes at different times is an effective method, but it usually takes a long time and does not meet the real-time requirements.

There are many ways to describe image quality characteristics, such as reflection, scattering, edges, or shapes.

2. Infrared camera live detection

Infrared face live detection is mainly based on optical flow method. Optical flow method uses the time-domain change and correlation of pixel intensity data in the image sequence to determine the "motion" of each pixel position, that is, to obtain the running information of each pixel from the image sequence. It uses Gaussian difference filters and LBP features Perform statistical analysis with support vector machines.

At the same time, the optical flow field is relatively sensitive to object movements, and the optical flow field can be used to detect eye movements and blinks in a unified manner. This kind of living body detection method can realize blind measurement without user cooperation.

From the comparison of the two pictures above, it can be seen that the optical flow features of the living face are displayed as irregular vector features, while the optical flow features of the photo face are regular and ordered vector features. photo.

3D camera live detection

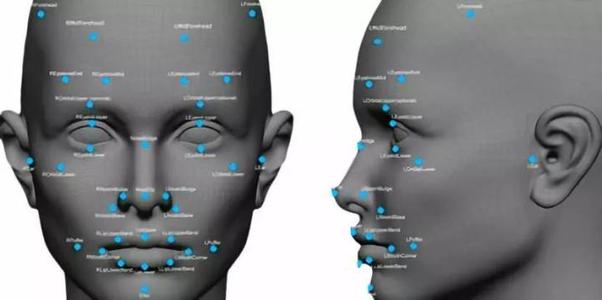

The human face is captured by a 3D camera, and the corresponding 3D data of the face area is obtained. Based on these data, the most distinguishing features are selected to train the neural network classifier. Finally, the trained classifier is used to distinguish between living and non-living. The selection of features is very important. The features we choose contain both global and local information. Such selection is beneficial to the stability and robustness of the algorithm.

3D face live detection is divided into the following 3 steps:

First, extract three-dimensional information of N (recommended 256) feature points of living and non-living face regions, and perform preliminary analysis and processing on the geometric structure relationship between these points;

Second, extract the three-dimensional information of the entire face area, further process the corresponding feature points, and then use the coordinated training Co-training method to train the positive and negative sample data, and use the obtained classifier for initial classification;

Finally, the feature points extracted from the above two steps are used to fit the surface to describe the characteristics of the 3D model. The convex areas are extracted from the depth image according to the curvature of the surface, the EGI features are extracted for each area, and then the spherical correlation is used. Reclassify and identify.

Source: Security Knowledge Network Link:http://www.iotworld.com.cn/html/News/201904/9d4c441c85ad707c.shtml